Why Nonprofits Should Run Essential Internet Services

from Citizenry Blog

Nonprofit organizations that run online services may have values and goals more closely aligned with those of their members than for-profit service providers do. Such organizations are typically mission-driven and focused on serving a specific community or social cause, and this focus can foster greater trust and accountability among members. Additionally, because nonprofits don't have to generate profits for shareholders, they may be able to charge lower fees. Lower fees can make their services accessible to a wider range of members, particularly those with lower incomes or limited resources.

Nonprofits may also prioritize community engagement and involve members in decision-making. This can give members a greater sense of ownership and give the organization a deeper understanding of members' needs and the issues they face. Nonprofits also tend to have a longer-term focus on serving their members and communities, whereas for-profit providers may be more focused on short-term profits. This long-term focus can lead to more targeted and effective services that have a positive social impact on the communities they serve.

Transparency is another important aspect of nonprofit organizations. They are typically more open about their operations and decision-making processes. When members can see how their contributions are used and how decisions are made, they are more likely to trust the organization and to hold it accountable.

Lastly, nonprofits have more flexibility in how they operate. Because they can prioritize their mission over profits, they can take on risks that for-profit companies might avoid. This flexibility can make nonprofits more innovative and adaptable, which can lead to more effective and efficient services. However, not all nonprofit organizations are created equal: some lack the transparency, accountability, or resources of others and may not always be able to match the level of service offered by for-profit providers.

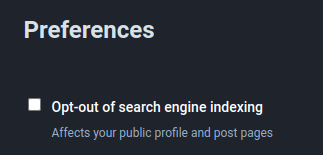

This is a blanket option that adds:

This is a blanket option that adds: