Blocking Googlebot for Mastodon and Other Sites

It is no secret that Google and other web crawlers index Mastodon instances. As a result, your posts, username, profile, and other identifiable information end up in their search indexes. If you host a site, Mastodon or otherwise, you may want to block these and other crawlers from some or all of your site(s).

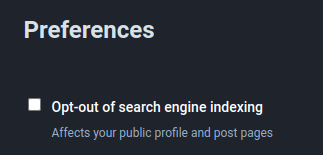

Check the search engine indexing opt-out in Mastodon under Admin > Preferences > Other.

This is a blanket option that adds:

<meta content='noindex' name='robots'>

You may want finer-grained control, using the options below.

More ways to block the creepy crawlers:

Add rules to your robots.txt file. The file format and a database of known robots are documented here:

http://www.robotstxt.org/robotstxt.html

http://www.robotstxt.org/db.html
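As a rough illustration of what such rules can look like and how to check them before deploying, here is a minimal sketch using Python's standard `urllib.robotparser`. The rules in `ROBOTS_TXT`, the `/private/` path, and the `example.social` host are assumptions made up for this example, not settings taken from Mastodon.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block Googlebot everywhere,
# and keep all other crawlers out of /private/ only.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /

User-agent: *
Disallow: /private/
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given rules allow user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for agent, url in [
        ("Googlebot", "https://example.social/@alice"),
        ("SomeOtherBot", "https://example.social/@alice"),
        ("SomeOtherBot", "https://example.social/private/notes"),
    ]:
        print(agent, url, is_allowed(ROBOTS_TXT, agent, url))
```

Keep in mind that robots.txt is advisory: well-behaved crawlers honor it, but nothing forces a crawler to do so.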

Add meta robots tag(s):

<meta name="robots" content="noindex, nofollow">

https://searchengineland.com/meta-robots-tag-101-blocking-spiders-cached-pages-more-10665

Add headers to Nginx to block crawlers:

add_header X-Robots-Tag "noindex, nofollow, nosnippet, noarchive";
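To confirm that a header like this is actually being served, something along these lines may help. It is a sketch only: the function names are hypothetical, it ignores the optional user-agent prefix form of the header (for example `googlebot: noindex`), and it treats `noindex` and `none` as the directives that block indexing.

```python
from typing import Optional, Set
from urllib.request import Request, urlopen

# Directives that tell a crawler not to index the page.
BLOCKING_DIRECTIVES = {"noindex", "none"}


def parse_directives(header_value: str) -> Set[str]:
    """Split a comma-separated X-Robots-Tag value into lowercase directives."""
    return {part.strip().lower() for part in header_value.split(",") if part.strip()}


def blocks_indexing(header_value: Optional[str]) -> bool:
    """Return True if the header value contains an index-blocking directive."""
    if not header_value:
        return False
    return bool(parse_directives(header_value) & BLOCKING_DIRECTIVES)


def fetch_x_robots_tag(url: str, timeout: float = 10.0) -> Optional[str]:
    """Send a HEAD request and return the X-Robots-Tag header, if present."""
    request = Request(url, method="HEAD")
    with urlopen(request, timeout=timeout) as response:
        return response.headers.get("X-Robots-Tag")


if __name__ == "__main__":
    print(blocks_indexing("noindex, nofollow, nosnippet, noarchive"))
```

Against a live site you would pass the result of `fetch_x_robots_tag("https://your-instance.example/")` (a placeholder URL) to `blocks_indexing`.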

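Block via your WAF/firewall: A more advanced approach is to use a WAF, or even iptables, to drop requests whose User-Agent matches a given string. Note that iptables string matching only sees unencrypted traffic, so it works on plain HTTP (port 80) but not on HTTPS. Googlebot's usual user agent also embeds its name inside a longer string, so match on the short token rather than a full user agent string.

Example:

iptables -A INPUT -p tcp --dport 80 -m string --algo bm --string "Googlebot" -j DROP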

https://developers.google.com/search/docs/crawling-indexing/overview-google-crawlers
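User-agent blocking boils down to a substring test, so the choice of string matters. The sketch below shows the idea as it might appear in a WAF rule or application middleware; `BLOCKED_TOKENS` and `is_blocked` are hypothetical names for illustration. Unlike the default iptables string match, this version compares case-insensitively.

```python
# Googlebot's common desktop user agent and the rarely used legacy form.
MODERN_GOOGLEBOT_UA = (
    "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
)
LEGACY_GOOGLEBOT_UA = "Googlebot/2.1 (+http://www.google.com/bot.html)"

# Hypothetical blocklist of short crawler tokens.
BLOCKED_TOKENS = ("Googlebot", "bingbot")


def is_blocked(user_agent: str, tokens=BLOCKED_TOKENS) -> bool:
    """Return True if any blocked token appears in the user agent string."""
    ua = user_agent.lower()
    return any(token.lower() in ua for token in tokens)


if __name__ == "__main__":
    # The short token matches both forms of the Googlebot user agent.
    print(is_blocked(MODERN_GOOGLEBOT_UA), is_blocked(LEGACY_GOOGLEBOT_UA))
    # The full legacy string is not a substring of the modern one,
    # so a rule matching it verbatim would miss most Googlebot traffic.
    print(LEGACY_GOOGLEBOT_UA in MODERN_GOOGLEBOT_UA)
```

User agents are trivially spoofed, so this kind of filter only stops crawlers that identify themselves honestly.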

Learn more: Citizenry.Technology